Current surveillance systems in enterprise facilities and public places operate around the clock and produce massive amounts of video and audio content. Typically, video is stored as compressed files, each covering several hours of footage. Because of the prohibitively high bandwidth and storage requirements of surveillance video and the need for continuous monitoring, closed-circuit television (CCTV) systems currently store video on local devices. For example, 1000 video surveillance streams generate tens of terabytes of data within a single hour, all of which must be stored. Smart systems that identify incidents must first transfer the data over the network for intelligent video analysis and then store only the incident footage. The situation often becomes more complicated when other types of data are involved in the processing (e.g. feeds from social media, other video sources such as YouTube and Periscope, sensor systems, etc.). Therefore, there is an increasing need for smart surveillance systems that can process such huge video data streams on the fly and provide a quick summary of “interesting” events happening during a specified time frame in a particular location.
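
As a rough, back-of-the-envelope check of that storage figure, the sketch below computes the volume produced by a number of streams over a given time. The per-stream bitrates are assumptions chosen for illustration (the text does not state them), and `storage_terabytes` is a hypothetical helper name.

```python
def storage_terabytes(streams: int, hours: float, mbit_per_s: float) -> float:
    """Return the volume, in terabytes (10**12 bytes), produced by
    `streams` feeds recorded for `hours` at `mbit_per_s` each."""
    bytes_per_second = mbit_per_s * 1_000_000 / 8  # megabits/s -> bytes/s
    total_bytes = bytes_per_second * 3600 * hours * streams
    return total_bytes / 1e12


if __name__ == "__main__":
    # Assumed per-stream bitrates in Mbit/s; illustrative values only.
    for rate in (8, 25):
        volume = storage_terabytes(streams=1000, hours=1, mbit_per_s=rate)
        print(f"{rate} Mbit/s per stream -> {volume:.2f} TB per hour")
```

Under these assumptions, 1000 streams at 25 Mbit/s produce about 11 TB per hour, while lower bitrates give proportionally less (about 3.6 TB at 8 Mbit/s), so the total depends heavily on resolution and compression.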

At the same time, new technological solutions and infrastructures are being used to support smarter and more efficient surveillance. In a dynamic, IoT-based and Big Data-driven environment such as Wide Area Video and Audio Surveillance, efficient and reliable real-time processing remains a challenge. Academic and commercially successful platforms (Apache Hadoop, Apache Spark) with tremendous corporate backing (Amazon, Microsoft, Google) still present high barriers to entry, and taking advantage of elasticity remains challenging even for sophisticated users: most of these frameworks were designed primarily for large-scale on-premise installations, and their static nature makes them poorly suited to real-time processing. Even more recent and dynamic technologies such as Apache Storm, although they offer a very efficient computational solution for real-time processing, do not exploit new decentralised paradigms (e.g. distributed clouds, edge and fog computing).

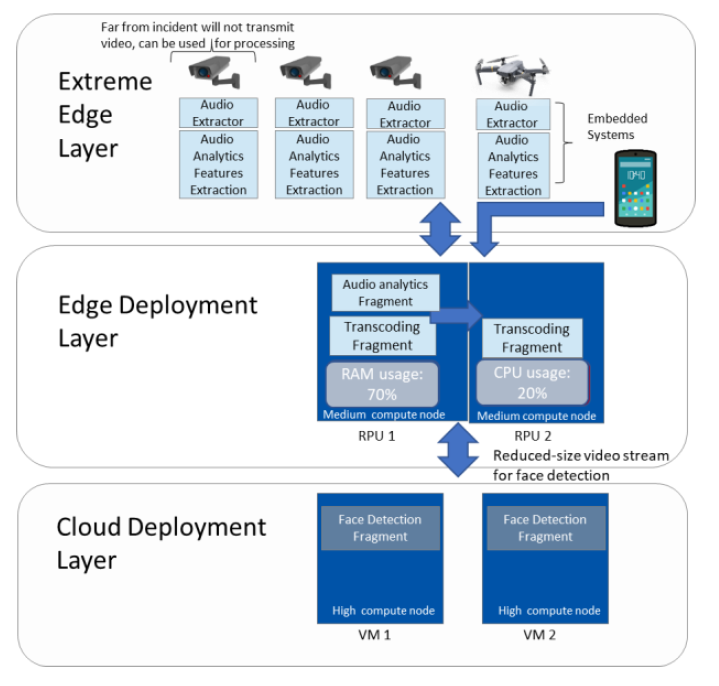

Driven by these developments, the PrEstoCloud project is developing a novel surveillance system based on the adaptable use of cloud, fog and edge computing. The solution places small computing devices at the sensor level (camera/audio) that provide a first level of processing based on “light” video and audio analytics. The video streams from the same location (e.g. a building) are fused at fog nodes, where a second level of processing takes place. A private cloud infrastructure with higher computing and processing capacity can be used to cope with unpredictable workloads from a varying number of video streams.

The accompanying figure depicts the new surveillance system and how specific functions can use its resources.

We can distinguish three major elements:

Layer-1: Camera-built embedded system

At this level, all camera-built embedded systems under the same or different domains will be synchronised based on localisation of data processing.

Layer-2: Fog Node

At this level, all Fog Nodes will operate under a common monitoring service where computing and network resources will be shared amongst them for higher performance.

Layer-3: Public or Private Cloud

At this level, services will be migrated between Fog Nodes and the cloud when the Fog Nodes are not able to handle sudden peaks in the workload.
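
The escalation from Layer-2 to Layer-3 can be illustrated with a minimal sketch. It is not the PrEstoCloud implementation: the `Node` class, the `place_stream` function and the notion of capacity as a number of concurrent streams are all hypothetical, and the cloud is simply assumed to be elastic enough to absorb any overflow.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A processing node (hypothetical model of a fog node or the cloud)."""
    name: str
    capacity: int  # assumed unit: streams the node can analyse concurrently
    load: int = 0  # streams currently assigned to the node

    def has_room(self) -> bool:
        return self.load < self.capacity


def place_stream(fog_nodes: list[Node], cloud: Node) -> Node:
    """Assign one new stream to the relatively least-loaded fog node that
    still has spare capacity; fall back to the cloud when every fog node
    is full (e.g. during a sudden workload peak)."""
    candidates = [node for node in fog_nodes if node.has_room()]
    if candidates:
        target = min(candidates, key=lambda node: node.load / node.capacity)
    else:
        target = cloud  # assumption: the cloud is treated as elastic
    target.load += 1
    return target


if __name__ == "__main__":
    fog = [Node("fog-a", capacity=2), Node("fog-b", capacity=1)]
    cloud = Node("cloud", capacity=10**6)
    print([place_stream(fog, cloud).name for _ in range(5)])
```

With two small fog nodes, the first three streams stay in the fog (`fog-a`, `fog-b`, `fog-a`) and the remaining two spill over to the cloud. A real system would also have to migrate running services back once the peak subsides, which this sketch leaves out.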

More details about the implementation of the new surveillance paradigm are available in: Ioannis Ledakis, Georgios Kioumourtzis, Michalis Skitsas, Thanassis Bouras, “Adaptive Edge and Fog Computing Paradigm for Wide Area Video and Audio Surveillance,” in Proc. 9th International Conference on Information, Intelligence, Systems & Applications (IISA), Zakynthos, Greece, 2018.